EmbeddingGemma: Micro embeddings for mobile AI

A practical guide to Google's EmbeddingGemma: matryoshka text embeddings designed for phones, Raspberry Pi, and edge devices—plus code examples, benchmarks, and build tips.
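
"Matryoshka" embeddings are trained so that nested prefixes of each vector (for example the first 128, 256, or 512 dimensions) remain useful on their own. That lets you trade a little retrieval quality for much smaller vectors on constrained devices. The sketch below illustrates the idea in plain Python. It assumes a Matryoshka-trained model such as EmbeddingGemma, whose full output is reportedly 768-dimensional (check the model card). The helper names are hypothetical, and the toy 8-dimensional vectors stand in for real model output.

```python
import math


def truncate_embedding(embedding: list[float], dim: int) -> list[float]:
    """Keep the first `dim` components of a Matryoshka-style embedding
    and rescale the prefix to unit length (hypothetical helper)."""
    if not 0 < dim <= len(embedding):
        raise ValueError(f"dim must be between 1 and {len(embedding)}, got {dim}")
    prefix = embedding[:dim]
    norm = math.sqrt(sum(x * x for x in prefix))
    if norm == 0.0:
        raise ValueError("cannot normalize an all-zero prefix")
    return [x / norm for x in prefix]


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Standard cosine similarity between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def storage_bytes(dim: int, bytes_per_value: int = 4) -> int:
    """Bytes needed to store one vector (float32 by default)."""
    return dim * bytes_per_value


# Toy 8-dimensional vectors standing in for real model output (assumption).
query = [0.8, 0.4, 0.3, 0.2, 0.1, 0.05, 0.02, 0.01]
document = [0.7, 0.5, 0.2, 0.3, 0.0, 0.1, 0.03, 0.02]

# Compare the same pair of vectors at progressively shorter prefix lengths.
for dim in (8, 4, 2):
    q = truncate_embedding(query, dim)
    d = truncate_embedding(document, dim)
    print(f"dim={dim}: cosine={cosine_similarity(q, d):.3f}, "
          f"bytes per vector={storage_bytes(dim)}")
```

Re-normalizing after truncation keeps dot-product and cosine scores consistent in vector indexes that assume unit-length inputs. As a rough sizing guide, cutting a 768-dimensional float32 vector to 128 dimensions shrinks it from 3,072 to 512 bytes, a 6x saving. How much retrieval quality that costs depends on the model and task, so measure it on your own data.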

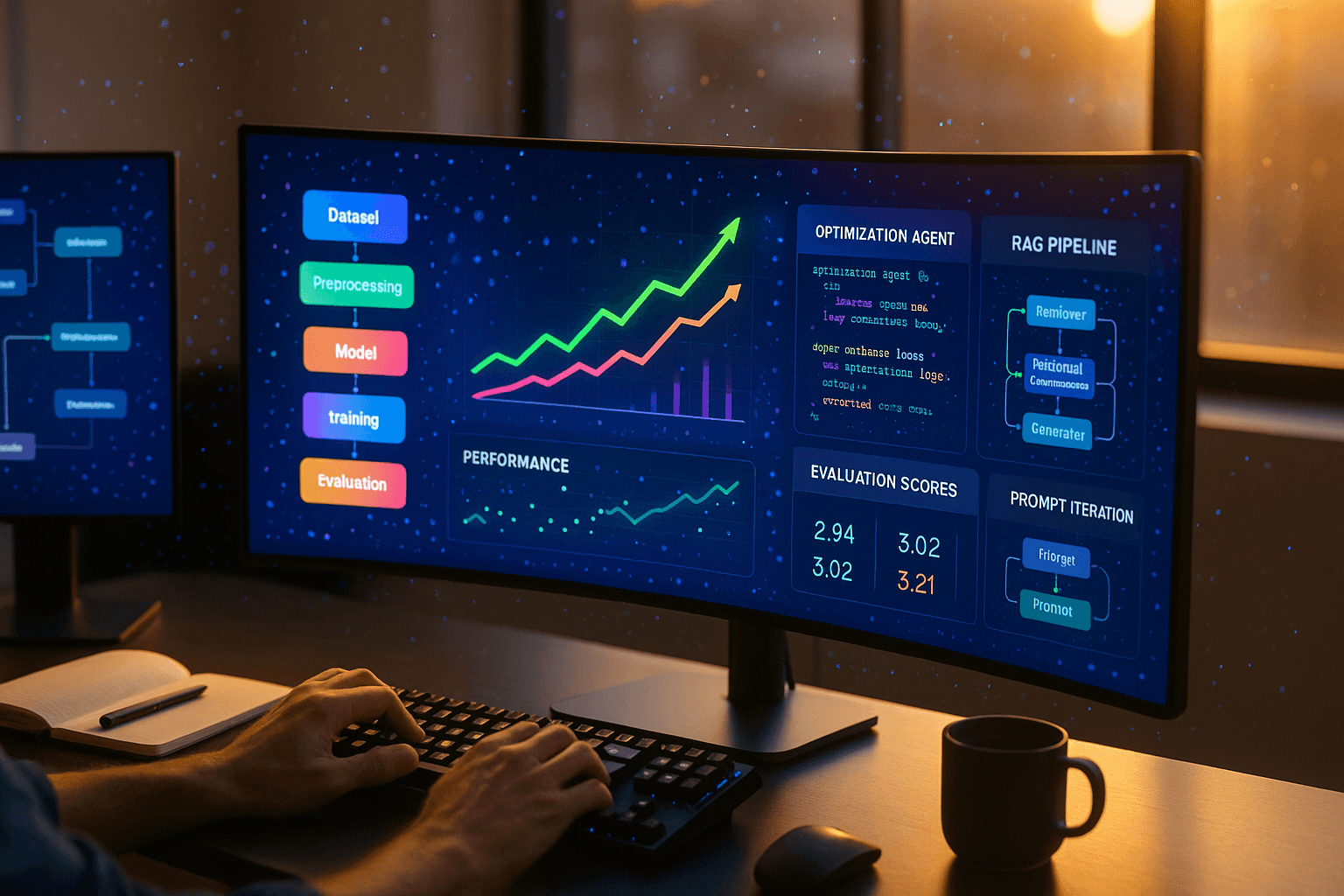
