Manual prompt engineering has become an outdated practice in modern AI development. Instead of manually crafting and tweaking prompts through trial and error, developers can now build automated systems that optimize prompts systematically, achieving significantly better results with less effort.

The Problem with Traditional Prompt Engineering

Traditional prompt engineering means adjusting prompts by hand: rewording instructions, asking the language model to 'act nicely', and telling it to perform specific tasks. This approach is time-intensive, inconsistent, and hard to scale. Developers often end up in endless loops of tweaking instructions with no systematic way to measure whether a change actually helped.

Consider a typical RAG (Retrieval-Augmented Generation) chatbot implementation. Initially, it might work adequately but suffer from common issues:

- Answering questions outside its intended scope

- Providing less useful responses than expected

- Making frequent mistakes in generated content

- Lacking consistency in response quality

Building an Automated Prompt Optimization System

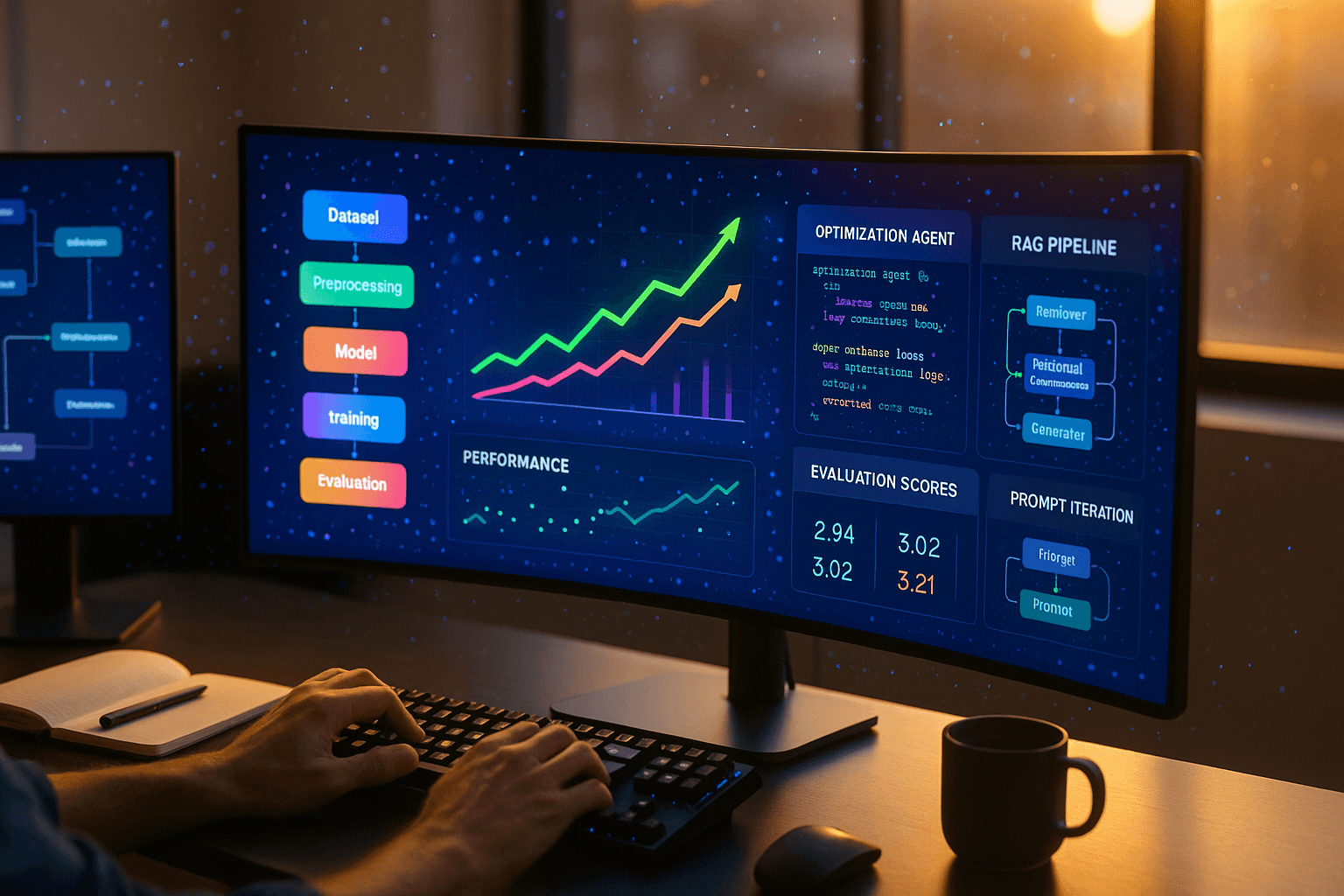

The solution lies in creating an automated system with three core components: a baseline implementation, an evaluation framework, and an optimization agent.

Component 1: The Baseline RAG Pipeline

Start with a simple RAG implementation using:

- A vector database (such as Chroma) for document storage

- OpenAI API for language model interactions

- Basic prompts that pass the retrieved documents to the model and ask it to answer the user's question

This baseline serves as the foundation for systematic improvement. The initial implementation might use a straightforward prompt that simply asks the model to answer the question based on the retrieved context.
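A minimal sketch of such a baseline follows, under stated assumptions. A keyword-overlap `retrieve` function stands in for a vector database like Chroma, and `call_llm` is a hypothetical callable wrapping whatever model API you use (such as the OpenAI API). The names `BASELINE_PROMPT`, `retrieve`, and `answer` are illustrative. The key idea is that the prompt lives in a single `template` parameter, which is exactly what the optimizer will later rewrite.

```python
import re
from typing import Callable, List, Set

# Hypothetical baseline prompt; this template is what the optimizer rewrites later.
BASELINE_PROMPT = (
    "Answer the question using the context below.\n\n"
    "Context:\n{context}\n\n"
    "Question: {question}\n"
    "Answer:"
)


def tokenize(text: str) -> Set[str]:
    # Lowercased set of words: a crude stand-in for embeddings.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: List[str], k: int = 2) -> List[str]:
    # Rank documents by word overlap with the question and keep the top k.
    # Assumption: in the real pipeline, a vector database such as Chroma does this.
    q = tokenize(question)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]


def answer(question: str, documents: List[str],
           call_llm: Callable[[str], str],
           template: str = BASELINE_PROMPT) -> str:
    # call_llm is a hypothetical callable that sends a prompt to the model API.
    context = "\n---\n".join(retrieve(question, documents))
    prompt = template.format(context=context, question=question)
    return call_llm(prompt)
```

If you pass an echo function such as `lambda p: p` as `call_llm`, you can inspect the assembled prompt offline before wiring in a real model.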

Component 2: Evaluation Framework

The evaluation system forms the cornerstone of automated optimization. Without proper evaluation, there's no way to measure improvement or guide the optimization process.

Evaluation Approaches:

- LLM as Judge: Uses another language model to evaluate responses without requiring ground truth data

- Classic NLP Metrics: Traditional metrics (such as exact match, BLEU, or ROUGE) that compare outputs against known correct answers

- Embedding-based Evaluation: Measures semantic similarity between reference and actual responses, usually as the cosine similarity of their embeddings
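The following small sketch makes the two reference-based approaches concrete. `token_f1` is a simplified token-overlap F1 in the spirit of SQuAD-style answer scoring (it skips the punctuation and article normalization that benchmark scripts usually apply). `cosine_similarity` assumes the embedding vectors were already produced by some embedding model, which is not shown.

```python
import math
from collections import Counter
from typing import Sequence


def token_f1(prediction: str, reference: str) -> float:
    """Simplified token-overlap F1 between a prediction and a reference answer."""
    pred = prediction.lower().split()
    ref = reference.lower().split()
    common = Counter(pred) & Counter(ref)  # multiset intersection of tokens
    overlap = sum(common.values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two embedding vectors (assumed precomputed)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # undefined for zero vectors; treat as no similarity
    return dot / (norm_a * norm_b)
```

Both functions need a reference answer to compare against. This is the main practical difference from the LLM-as-judge approach.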

For most applications, LLM-as-judge evaluation provides the best balance of flexibility and ease of implementation. This approach can assess answers based on context and questions without requiring pre-written ground truth responses.
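A judge can be sketched as a prompt plus a parser. In this hypothetical example, `JUDGE_PROMPT` asks for a JSON verdict with a 0-to-1 score, and `call_llm` is an assumed callable wrapping the judge model. Both the wording and the scale are illustrative choices, not a fixed standard.

```python
import json
import re
from typing import Callable, Tuple

# Illustrative judge prompt; the criteria and the 0-1 scale are assumptions.
JUDGE_PROMPT = """You are grading a chatbot answer.
Question: {question}
Retrieved context: {context}
Answer: {answer}

Is the answer correct, grounded in the context, and within the chatbot's scope?
Reply with JSON only: {{"score": <number from 0 to 1>, "reason": "<one sentence>"}}"""


def judge(question: str, context: str, answer: str,
          call_llm: Callable[[str], str]) -> Tuple[float, str]:
    """Ask a judge model to grade an answer; return (score, reason)."""
    reply = call_llm(JUDGE_PROMPT.format(question=question, context=context, answer=answer))
    match = re.search(r"\{.*\}", reply, re.DOTALL)  # tolerate text around the JSON
    if not match:
        return 0.0, "Judge reply was not valid JSON"
    try:
        data = json.loads(match.group(0))
        score = float(data.get("score", 0.0))
    except (ValueError, TypeError):
        return 0.0, "Judge reply was not valid JSON"
    # Clamp to [0, 1] in case the judge drifts outside the requested range.
    return max(0.0, min(1.0, score)), str(data.get("reason", ""))
```

Clamping the score and falling back to 0.0 on malformed replies keeps a single bad judge response from crashing or skewing an optimization run.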

Component 3: Optimization Agent

The optimization agent automates the prompt improvement process by:

- Researching current prompt engineering best practices online

- Running initial evaluations to establish baseline performance

- Analyzing failure reasons from evaluation results

- Generating improved prompts based on research and failure analysis

- Testing new prompts and measuring performance improvements

- Iterating until satisfactory results are achieved
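Stripped of the research step, the loop above can be sketched without tying it to any framework. `evaluate` and `propose` are hypothetical callables you would supply. The first runs the evaluation set against a prompt and returns a mean score plus failure reasons. The second asks an LLM, informed by any research notes, for a revised prompt. The stopping rule (a target score or an iteration cap) is an assumption.

```python
from typing import Callable, List, Tuple

# Hypothetical signatures (assumptions, not a specific framework's API):
#   evaluate(prompt) -> (mean_score, list_of_failure_reasons)
#   propose(prompt, failures) -> new_prompt
Evaluate = Callable[[str], Tuple[float, List[str]]]
Propose = Callable[[str, List[str]], str]


def optimize_prompt(initial_prompt: str, evaluate: Evaluate, propose: Propose,
                    target: float = 0.9, max_iterations: int = 5
                    ) -> Tuple[str, float, List[Tuple[int, float]]]:
    """Evaluate, analyze failures, propose a new prompt, re-test; keep the best."""
    best_prompt = initial_prompt
    best_score, failures = evaluate(initial_prompt)  # baseline run
    history = [(0, best_score)]
    for i in range(1, max_iterations + 1):
        if best_score >= target:
            break  # good enough; stop early
        candidate = propose(best_prompt, failures)
        score, candidate_failures = evaluate(candidate)
        history.append((i, score))
        if score > best_score:  # accept only strict improvements
            best_prompt, best_score, failures = candidate, score, candidate_failures
    return best_prompt, best_score, history
```

Because a candidate is accepted only when it strictly beats the current best, a bad proposal never replaces a working prompt.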

Implementation Strategy

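An effective automated prompt optimization system needs three pieces in place before the agent can do useful work: a representative dataset, a scoring method that explains its scores, and the agent itself.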

Dataset Creation

Develop a comprehensive evaluation dataset containing:

- Representative questions for your use case

- Expected facts or elements that should appear in correct answers

- Edge cases and challenging scenarios

- Diverse question types and complexity levels

For example, if building a documentation chatbot, create 20-30 questions covering different aspects of your documentation, with 2-3 key facts that should appear in each answer.
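One way to store such a dataset is sketched below; all names are hypothetical. `EvalCase` holds a question, its expected facts, and optional tags for edge cases or out-of-scope questions. `check_dataset` flags cases outside the 2-3 fact range and duplicate questions before any optimization starts. The example questions are placeholders, not real data.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class EvalCase:
    question: str
    expected_facts: List[str]  # key facts a correct answer should contain
    tags: List[str] = field(default_factory=list)  # e.g. "edge-case", "out-of-scope"


# Placeholder examples for a hypothetical documentation chatbot.
EXAMPLE_CASES = [
    EvalCase("How do I install the SDK?", ["pip install", "Python 3"], ["basic"]),
    EvalCase("What is the weather today?", ["outside the scope", "documentation"],
             ["out-of-scope"]),
]


def check_dataset(cases: List[EvalCase], min_facts: int = 2,
                  max_facts: int = 3) -> List[str]:
    """Return a list of problems; an empty list means the dataset passed."""
    problems = []
    seen = set()
    for i, case in enumerate(cases):
        n = len(case.expected_facts)
        if not min_facts <= n <= max_facts:
            problems.append(f"case {i}: expected {min_facts}-{max_facts} facts, got {n}")
        key = case.question.strip().lower()
        if key in seen:
            problems.append(f"case {i}: duplicate question")
        seen.add(key)
    return problems
```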

Evaluation Methodology

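Implement a scoring system that provides actionable feedback. Here `fact_present_in_answer` is given a placeholder implementation, a simple substring check:

```python
def fact_present_in_answer(fact, answer):
    # Placeholder implementation (an assumption): a naive case-insensitive
    # substring check. An LLM judge or semantic match handles paraphrases better.
    return fact.lower() in answer.lower()


def evaluate_response(question, answer, expected_facts):
    # Returns (score between 0 and 1, list of feedback strings). `question` is
    # unused by the substring check but useful for judge-based fact checks.
    if not expected_facts:
        return 1.0, []  # nothing to check; also avoids division by zero
    score = 0
    feedback = []
    for fact in expected_facts:
        if fact_present_in_answer(fact, answer):
            score += 1
        else:
            feedback.append(f'Missing fact: {fact}')
    return score / len(expected_facts), feedback
```

This approach provides both numerical scores for optimization and specific feedback for improvement.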
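The optimizer needs more than one number per question, so per-question results are usually rolled up into a report. This sketch assumes each result is a `(question, score, feedback)` tuple, for instance built from `evaluate_response`. `summarize_results` and its report fields are illustrative names.

```python
from collections import Counter
from typing import Dict, List, Tuple


def summarize_results(results: List[Tuple[str, float, List[str]]],
                      top_n: int = 5) -> Dict:
    """Aggregate per-question (question, score, feedback) tuples into a report.

    The report format is an assumption: a mean score to optimize against, the
    lowest-scoring questions, and the most frequent feedback messages.
    """
    if not results:
        return {"mean_score": 0.0, "worst": [], "common_feedback": []}
    mean = sum(score for _, score, _ in results) / len(results)
    worst = sorted(results, key=lambda r: r[1])[:top_n]
    counts = Counter(msg for _, _, feedback in results for msg in feedback)
    return {
        "mean_score": mean,
        "worst": [(q, s) for q, s, _ in worst],
        "common_feedback": counts.most_common(top_n),
    }
```

Passing the most frequent feedback messages to the agent, rather than every individual failure, keeps its input short and points it at systematic problems.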

Agent Implementation

Build the optimization agent using frameworks like CrewAI or LangChain. The agent should:

- Conduct web research on current prompt engineering techniques

- Analyze evaluation failures to identify improvement opportunities

- Generate new prompts incorporating best practices and addressing specific failures

- Test improvements systematically
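Whatever framework hosts the agent, the core step is an LLM call that turns the current prompt, its failures, and research notes into a revised prompt. The template below is a hypothetical example of that instruction. It does not use any CrewAI or LangChain API, and `build_meta_prompt` and `max_failures` are illustrative names.

```python
from typing import List

# Illustrative meta-prompt for the optimization agent; the wording and section
# names are assumptions, to be adapted to whichever agent framework you use.
META_PROMPT = """You are improving the system prompt of a documentation chatbot.

Current prompt:
<<<
{current_prompt}
>>>

Evaluation score: {score:.2f}
Observed failures:
{failures}

Prompt-engineering notes from research:
{research_notes}

Write an improved prompt that fixes the failures above without removing behavior that already works.
Return only the new prompt text."""


def build_meta_prompt(current_prompt: str, score: float, failures: List[str],
                      research_notes: List[str], max_failures: int = 10) -> str:
    """Assemble the instruction the agent's LLM receives when proposing a new prompt."""
    failure_lines = "\n".join(f"- {f}" for f in failures[:max_failures]) or "- (none)"
    note_lines = "\n".join(f"- {n}" for n in research_notes) or "- (none)"
    return META_PROMPT.format(current_prompt=current_prompt, score=score,
                              failures=failure_lines, research_notes=note_lines)
```

Capping the number of listed failures keeps the meta-prompt within a reasonable context size when many questions fail at once.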

Results and Performance Gains

Automated prompt optimization can deliver significant improvements over manual approaches. In practice, this methodology can achieve:

- Evaluation scores improving from 0.4 to 0.9 (on a 0-to-1 scale) in just two iterations, more than double the score of the initial manual prompts

- Comprehensive prompts that automatically incorporate industry best practices

- Steady improvement with little manual intervention between iterations

The generated prompts often include sophisticated instructions that would take considerable manual effort to develop, such as detailed role definitions, specific response guidelines, and edge case handling.

Advanced Considerations

Avoiding Overfitting

Like traditional machine learning, automated prompt optimization can overfit to evaluation data. Prevent this by:

- Using larger, more diverse evaluation datasets

- Implementing train/validation/test splits

- Testing optimized prompts on completely new examples

- Regularly updating evaluation criteria
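The train/validation/test idea carries over directly to prompt optimization. In this sketch (function names are hypothetical), the optimizer only ever sees failures from the training split, and candidate prompts are compared on the validation split. The test split is scored once at the end. The 20%/20% split fractions and the fixed seed are assumptions.

```python
import random
from typing import Callable, List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_dataset(items: Sequence[T], val_frac: float = 0.2, test_frac: float = 0.2,
                  seed: int = 0) -> Tuple[List[T], List[T], List[T]]:
    """Shuffle once with a fixed seed, then cut into train / validation / test."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # same seed, same split on every run
    n_test = int(len(shuffled) * test_frac)
    n_val = int(len(shuffled) * val_frac)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test


def pick_prompt(candidates: Sequence[str], val_score: Callable[[str], float]) -> str:
    # Select on validation data, not on the training split the optimizer saw.
    return max(candidates, key=val_score)
```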

Meta-Optimization Opportunities

The optimization approach can be applied recursively:

- Optimize evaluator prompts for better assessment quality

- Improve agent prompts for more effective optimization

- Create self-improving systems that enhance their own optimization capabilities
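Optimizing the evaluator itself requires something to measure the evaluator against. A common approach, assumed here, is a small set of human-labeled answers. Each candidate evaluator prompt is scored by how often its pass/fail verdict matches the human label. `make_judge` is a hypothetical factory that turns an evaluator prompt into a scoring function, for example by wrapping an LLM call.

```python
from typing import Callable, List, Sequence, Tuple

# Each labeled example: (question, answer, human_verdict); True means "acceptable".
Labeled = Tuple[str, str, bool]
Judge = Callable[[str, str], float]  # (question, answer) -> score in [0, 1]


def judge_agreement(examples: Sequence[Labeled], judge: Judge,
                    threshold: float = 0.5) -> float:
    """Fraction of examples where the judge's pass/fail matches the human label."""
    if not examples:
        return 0.0
    hits = sum((judge(q, a) >= threshold) == human for q, a, human in examples)
    return hits / len(examples)


def best_evaluator_prompt(prompts: List[str], examples: Sequence[Labeled],
                          make_judge: Callable[[str], Judge]) -> str:
    """Select the evaluator prompt whose judge agrees most with human labels."""
    return max(prompts, key=lambda p: judge_agreement(examples, make_judge(p)))
```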

Implementation Tools and Resources

Several tools and frameworks facilitate automated prompt optimization:

- CrewAI: Multi-agent framework for building optimization agents

- LangChain: Comprehensive toolkit for LLM applications

- OpenTelemetry: Observability framework (traces, metrics, and logs) for monitoring optimization runs

- Vector databases: Chroma, Pinecone, or Weaviate for document storage

Best Practices for Implementation

Successful automated prompt optimization requires attention to several key practices:

- Start Simple: Begin with basic evaluation criteria and gradually increase complexity

- Measure Everything: Track not just final scores but also iteration progress and failure patterns

- Version Control: Maintain records of prompt versions and their performance

- Human Oversight: Review generated prompts for quality and appropriateness

- Continuous Monitoring: Regularly evaluate performance on new data
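For the version-control practice, even an append-only log goes a long way. This sketch (file format and function names are assumptions) writes each prompt version to a JSON Lines file together with its score, a note, and a content hash, so identical prompts always get the same id.

```python
import hashlib
import json
import time
from pathlib import Path
from typing import Optional


def record_prompt_version(log_path: str, prompt: str, score: float,
                          note: str = "", timestamp: Optional[float] = None) -> str:
    """Append one prompt version and its score to a JSON Lines log; return its id."""
    version_id = hashlib.sha256(prompt.encode("utf-8")).hexdigest()[:12]  # content hash
    entry = {
        "id": version_id,
        "timestamp": time.time() if timestamp is None else timestamp,
        "score": score,
        "note": note,
        "prompt": prompt,
    }
    with Path(log_path).open("a", encoding="utf-8") as f:
        f.write(json.dumps(entry) + "\n")
    return version_id


def best_version(log_path: str) -> dict:
    """Return the highest-scoring entry recorded so far."""
    with Path(log_path).open(encoding="utf-8") as f:
        entries = [json.loads(line) for line in f if line.strip()]
    return max(entries, key=lambda e: e["score"])
```

Keeping prompts as plain files in a Git repository works equally well. The log simply pairs each version with its measured score.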

Conclusion

Manual prompt engineering represents an outdated approach to AI system development. By implementing automated optimization systems with proper evaluation frameworks and intelligent agents, developers can achieve superior results with greater consistency and less manual effort.

The key insight is treating prompt optimization as an engineering problem rather than an art form. With systematic evaluation, automated research, and iterative improvement, AI systems can optimize themselves more effectively than human engineers manually crafting prompts.

This approach scales better, produces more consistent results, and frees developers to focus on higher-level system design rather than low-level prompt tweaking. The future of prompt development lies in building systems that improve themselves automatically, guided by objective evaluation and systematic optimization strategies.